This is day 13 of "One CSV, 30 stories":http://blog.whatfettle.com/2014/10/13/one-csv-thirty-stories/ a series of articles exploring "price paid data":https://www.gov.uk/government/statistical-data-sets/price-paid-data-downloads from the Land Registry found on GOV.UK. The code for this and the other articles is available as open source from "GitHub":https://github.com/psd/price-paid-data

"Yesterday":http://blog.whatfettle.com/2014/11/04/one-csv-thirty-stories-12-stacked/ I decided to switch from trends in prices to looking into where all the houses listed inside the CSV are located.

The price paid data is a series of transactions, with a postal address for each transaction. Here are the first few addresses:

bc. $ awk -F'⋯' '{print $7,$8,$9,$10,$11,$12,$13,$3 }' pp.tsv | head

106 READING ROAD NORTHOLT NORTHOLT EALING GREATER LONDON UB5 4PJ

58 ADAMS MEADOW ILMINSTER ILMINSTER SOUTH SOMERSET SOMERSET TA19 9DD

58 WHELLOCK ROAD LONDON EALING GREATER LONDON W4 1DZ

17 WESTGATE MORPETH MORPETH CASTLE MORPETH NORTHUMBERLAND NE61 2BH

4 MASON GARDENS WEST WINCH KING'S LYNN KING'S LYNN AND WEST NORFOLK NORFOLK PE33 0RU

5 WILD FLOWER WAY DITCHINGHAM BUNGAY SOUTH NORFOLK NORFOLK NR35 2SF

17 OAKWOOD DRIVE CLYDACH SWANSEA NEATH PORT TALBOT NEATH PORT TALBOT SA8 4DG

11 WATER LANE BRISLINGTON BRISTOL CITY OF BRISTOL CITY OF BRISTOL BS4 5AW

148 HIGH STREET MARSHFIELD CHIPPENHAM SOUTH GLOUCESTERSHIRE SOUTH GLOUCESTERSHIRE SN14 8LU

34 WELLAND CRESCENT ELSECAR BARNSLEY BARNSLEY SOUTH YORKSHIRE S74 8AG

We can count how many unique addresses there are in the CSV with a spot of awk:

$ awk -F'⋯' '{print $7,$8,$9,$10,$11,$12,$13,$3 }' pp.tsv | sort -u | wc -l

13660622

Which tells us 13,660,622, or about 70% of all property transactions over the past 20 years have been recorded with a unique address.

The address for a property may legitimately change with changes to the region and district names and boundary changes, road names change for a number of reasons, often as acts of "Bowlderisation":http://en.wikipedia.org/wiki/Gropecunt_Lane and even the postcode, an abstract identifier with the purpose of identifying a location, may change or possibly be reused over time. Add to that inconsistencies in how the address is formatted, and it becomes quite difficult to relate two different addresses to the same property or location.

To understand the history of prices paid for a property or a location we need:

# a unique reference for the property being sold, not just the postal address which may change

# the geographical location of each property

Neither of these things are a part of the Land Registry open data, and although the Land Registry releases "index polygons":https://www.gov.uk/government/collections/download-inspire-index-polygons as open data under the INSPIRE directive, there is no obvious way to correlate a polygon to the associated transactions in the price paid dataset.

The best we can do to try and disambiguate and locate a property is to turn its address into a geographical location using a "geocoder":http://en.wikipedia.org/wiki/Geocoding.

Unfortunately geocoding UK addresses has a few issues, namely the location of postcodes is held in the "Post Office Address File (PAF)":http://en.wikipedia.org/wiki/Postcode_Address_File from the Royal Mail. If I was minded to buy a licence to use the PAF from the Royal Mail I'd still have to match addresses from the dataset against those held in a different structure in the PAF and match addresses with changed street names or historical postcodes which may no longer be in the PAF.

Even if it was cheap and easy to obtain a copy of the PAF file and I overcame the address matching challenge, it would be still be difficult for me to make anything for anyone to use and freely build upon under the terms of the "PAF license":http://www.poweredbypaf.com/end-user/licensing/licensing-overview/ which are quite restrictive. I believe "making things open makes things better":https://www.gov.uk/design-principles#tenth, and to build an open project in the open with open source code you can learn from, build upon and easily contribute back to means using "open data":http://en.wikipedia.org/wiki/Open_data.

Long term things look hopeful for open address data. Only yesterday, the "Open Data Institute":http://theodi.org/ (ODI) announced how a "£383k government grant has been released to create UK open address list":http://theodi.org/news/383k-government-grant-released-to-create-uk-open-address-list. I would certainly bet on the amazing people who the ODI will attract to build an open, consumable, sustainable platform for address data, but that is unlikely to help me pinpoint our 19 million transactions on property within the next few weeks.

Our best bet with open data is to locate property by the postcode and use Ordinance Survey "OS OpenData™ Code-Point® Open":http://www.ordnancesurvey.co.uk/business-and-government/products/opendata-products.html dataset to locate the centre point of each postcode area. Nothing communicates "open data" like a registered name under trademarked branding, but even with what appears to be quite a lot of care and attention being paid to marketing it took me a while to realise I was expected to register my name, create an order, and then retrieve the file by following a link delivered by email.

Whilst I was waiting for the OS to reply to my order (it was lost in my SPAM folder) I remembered how the nice people at "My Society":http://mysociety.org have already shown them a better way by "republishing the OS open data":http://parlvid.mysociety.org/os/, including historical versions, under the terms of the "OS Open Data licence":http://www.ordnancesurvey.co.uk/business-and-government/licensing/using-creating-data-with-os-products/os-opendata.html.

The OS zipfile contains postcode information spread across lots of small CSV files, so we have to do some work to extract and then concatenate these files before they're useful to us:

bc. $ curl -s http://parlvid.mysociety.org/os/codepo_gb-2014-08.zip > codepo_gb.zip

$ unzip -o -d codepo_gb tmp/codepo_gb.zip

$ cat codepo_gb/Data/CSV/* |

sed -e 's/[⋯"]//g' -e 's/,/⋯/g' |

cut -d'⋯' -f1,3,4 |

sort > codepo_gb.tsv

bc. head -4 postcode.tsv

29913

280 TR8 4LX

274 CM21 9PF

266 B5 4TR

Thirty thousand (approximately 0.0015%) records don't have a postcode, which is a shame, but shouldn't be too much of a problem for a visualisation. Sorting the list of postcodes we prepared on "Day 2":http://blog.whatfettle.com/2014/10/15/one-csv-thirty-stories-2-counting-things/ and joining them with the OS data geocodes each of our postcodes:

bc. cat postcode.tsv |

sed 's/ //g' |

awk '{print $2 "⋯" $1}' |

sort > postcodes.tsv

join -t'⋯' codepo_gb.tsv postcodes.tsv > postcodes_os.tsv

giving lines of postcode, easting, northing and a count of how many times the postcode appears in the price paid data:

bc. $ head postcodes_os.tsv

AL100AB⋯522680⋯209765⋯23

AL100AD⋯522997⋯209812⋯2

AL100AH⋯522677⋯209836⋯9

AL100AJ⋯522757⋯209715⋯3

AL100AL⋯522693⋯209684⋯19

AL100AN⋯522902⋯209859⋯22

AL100AQ⋯522562⋯209797⋯10

AL100AT⋯523092⋯209243⋯75

AL100AU⋯522366⋯208852⋯12

AL100AW⋯522151⋯209037⋯1

bc. $ wc -l postcodes.tsv postcodes_os.tsv

1155780 postcodes.tsv

1150086 postcodes_os.tsv

2305866 total

Which means 0.5% of our postcodes don't appear in the OS OpenData™ Code-Point® Open dataset.

At this point I could translate the OS coordinates into "WGS84":http://en.wikipedia.org/wiki/World_Geodetic_System and put them onto a Google or open street map, but with 1.1 million points, who needs a map? We can push the eastings and northings as cartesian coordinates to imagemagick:

bc. width=7000

height=13000

radius=3

color=black

opacity=0.2

xmax=700000

ymax=1300000

cat <<-!

viewbox 0 0 $width $height fill transparent rectangle 0,0 $width $height

fill $color

fill-opacity $opacity

!

awk -F' ' \

-v width=$width \

-v height=$height \

-v radius=$radius \

-v xmax=$xmax \

-v ymax=$ymax \

{

x = width * $2 / xmax;

y = height - (height * $3 / ymax);

printf "circle %d,%d,%d,%d\n", x, y, x+radius, y+radius;

} |

convert mvg:- map.png

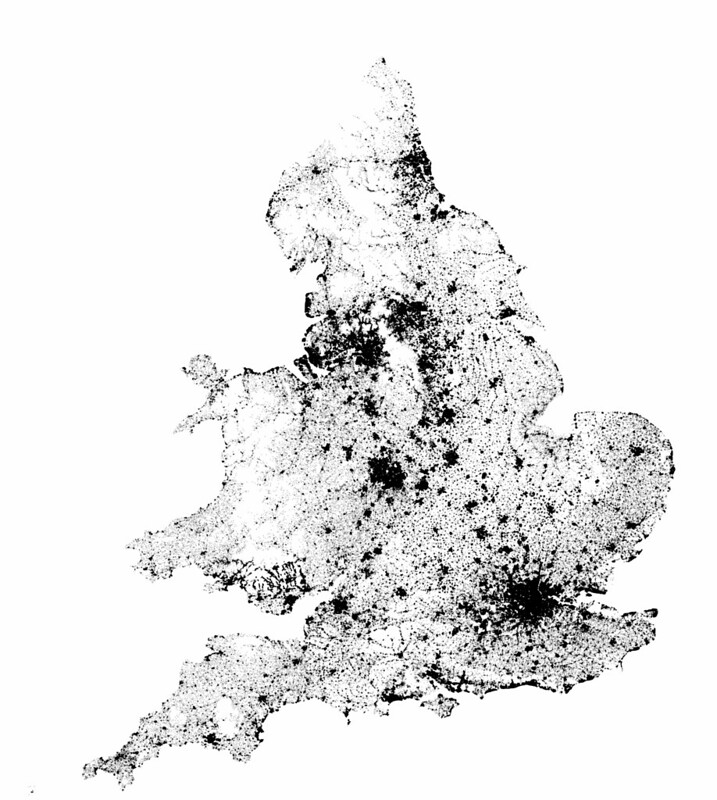

Revealing a map as a scatterplot:

This shows us how postcodes are clustered around towns and cities, and how the Land Registry operates for England and Wales only. Not very insightful, but enough for me on a busy day. Maybe "tomorrow":http://blog.whatfettle.com/2014/11/07/one-csv-thirty-stories-14-hackday/ we'll actually do something interesting with the data.

I wrote this whilst travelling to an internal "Land Registry Hackday":https://github.com/landregistry/hackday where I hope to make some art based on these experiments. Please help guide my hack and this series of blog posts by answering the questions in this "very short, anonymous survey":http://tinyurl.com/1csv-30stories-survey